We're recruiting PhD students (2025/2026 intake) and researchers (June/Sep 2025/2026 start) to pioneer research on AI Scientists, LLMs/VLMs, and multi-agent self-evolution.

👉 Contact us now with your CV and research vision: zhangbo@pjlab.org.cn & bo.zhangzx@gmail.com

🔥 Highlighted Projects

- NovelSeek. (An end-to-end auto-research framework that has demonstrated its versatility across 12 scientific research tasks.) [Project][Technical report]

- MinerU and PDF-Extract-Kit. (Popular open-source tools that convert PDFs into machine-readable formats (e.g., Markdown, JSON), from which content can easily be exported to other formats.) [Project][Technical report]

- InternVL 1.5 and InternVL 2. (Ranked 1st among open-source VLMs on MMMU, DocVQA, ChartQA, and MathVista.) [Project][Technical report]

- 3DTrans (work during the PhD period). (An open-source codebase for continuous learning in autonomous driving tasks, including Unsupervised Domain Adaptation (UDA), Active Domain Adaptation (ADA), Semi-Supervised Domain Adaptation (SSDA), and Multi-dataset Domain Fusion (MDF).) [Project][Technical report]

🌎 News

2025:

- 2025.06: SPOT has been accepted as a regular paper in Transactions on Pattern Analysis and Machine Intelligence (TPAMI).

- 2025.06: Three papers accepted to ICCV 2025: Chimera, Lumina-Image 2.0, and TOP.

- 2025.05: 🔥🔥🎉🎉 When Agent Becomes the Scientist: your ultimate AI-powered scientist for finding, analyzing, and experimenting like never before! NovelSeek Page

- 2025.05: 🎉🎉 SurveyForge and Dolphin are accepted by ACL 2025.

- 2025.05: MME-CoT is accepted by ICML 2025.

- 2025.02: 🎉🎉 Three papers are accepted by CVPR 2025: JiSAM, OmniDocBench, and CDM.

- 2025.01: One of our papers has been accepted for publication in TPAMI; another has been accepted by TGRS.

- 2025.01: 🎉🎉 Two papers accepted to ICLR 2025: GeoX and OmniCorpus.

2024:

- 2024.10: 🎉🎉 Grateful to Fudan_CYL and Fudan_SIST for their heartfelt recognition and thoughtful sharing of my research work.

- 2024.10: 🎉🎉 The technical report for MinerU, an open-source solution for high-precision document content extraction with strong table extraction ability (StructEqTable-Deploy), has been published.

- 2024.09: Three papers accepted to NeurIPS 2024: AdaptiveDiffusion, ZOPP, and LeapAD.

- 2024.08: Bo Zhang was invited to serve as a PC member of AAAI 2025.

- 2024.08: We collaborated with the OpenDataLab team to open-source PDF-Extract-Kit, which extracts high-quality, structured content from PDFs and has gained 6K+ GitHub stars.

- 2024.07: One paper (Reg-TTA3D) is accepted by ECCV 2024. We explore test-time adaptation for 3D object detection for the first time.

- 2024.05: Our paper entitled "Cross-Task Linearity Emerges in the Pretraining-Finetuning Paradigm" is accepted for publication in ICML 2024.

- 2024.05: One paper (Expert Pruning-Skipping) is accepted by ACL 2024.

- 2024.02: One paper (Once for Both) is accepted by CVPR 2024.

-

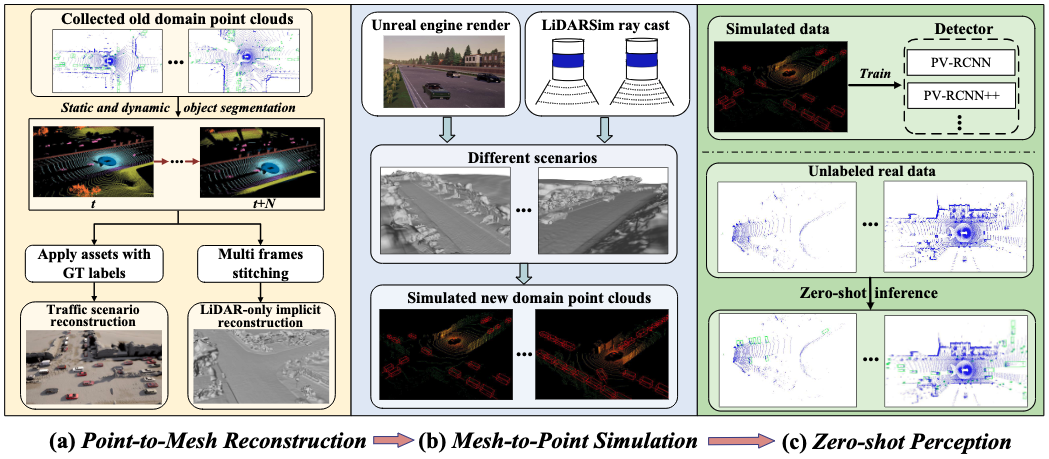

2024.01: One paper (ReSimAD) is accepted by ICLR 2024. We propose a zero-shot generalization framework by reconstructing mesh and simulating target point clouds.

- 2024.01: Two papers (IPNet and MVNet) are accepted by TCSVT.

2023:

- 2023.12: We have released the ChartX benchmark, covering 18 chart types, 7 chart tasks, and 22 disciplinary topics, to evaluate the chart-related capabilities of existing MLLMs.

- 2023.09: SPOT, a promising and scalable 3D pre-training approach for autonomous driving, has been released.

- 2023.09: One paper entitled "AD-PT: Autonomous Driving Pre-Training with Large-scale Point Cloud Dataset" is accepted by NeurIPS 2023.

- 2023.08: One paper (BFDA) on cross-domain background-focused alignment is accepted by TIP.

- 2023.07: One paper entitled "SUG: Single-dataset Unified Generalization for 3D Point Cloud Classification" is accepted by ACM MM 2023.

- 2023.04: One paper entitled "Performance-aware Approximation of Global Channel Pruning for Multitask CNNs" is accepted for publication in T-PAMI.

- 2023.03: 🎉🎉 Three papers are accepted by CVPR 2023: Uni3D, Bi3D, and GDP.

- 2023.02: Bo Zhang began exploring how to improve the problem-solving and reasoning abilities of LLMs and VLMs on complex modalities, including charts, tables, geometry, and scientific documents, by studying foundation models from the perspective of structured, knowledge-rich data.

📝 Selected Publications

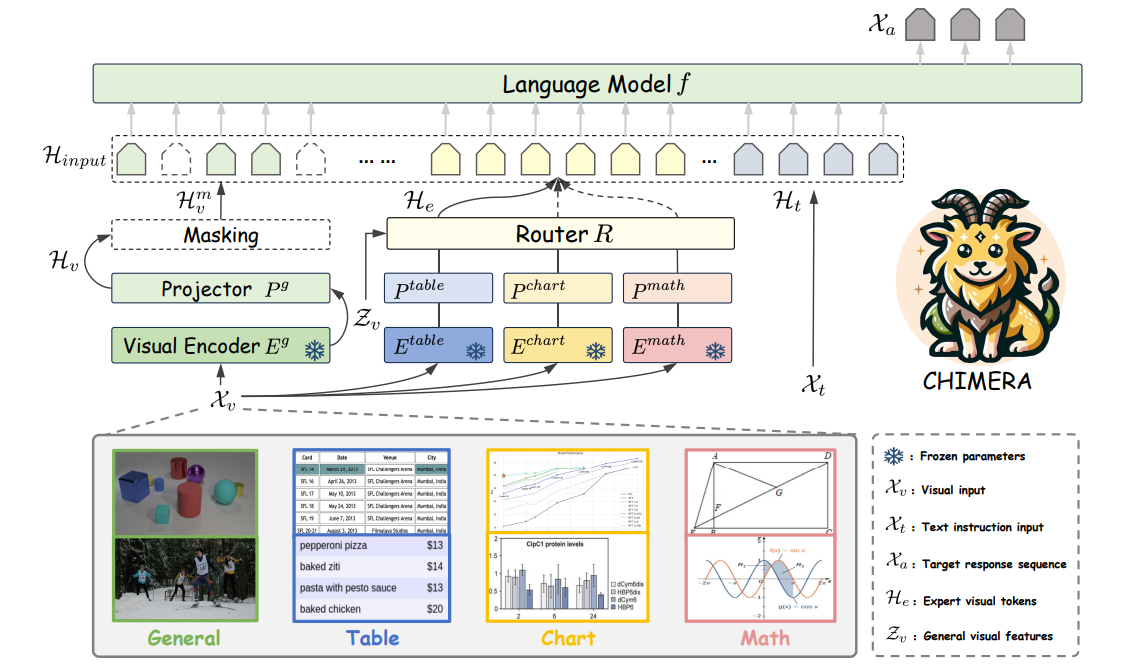

Chimera: Improving Generalist Model with Domain-Specific Experts

Tianshuo Peng, Mingsheng Li, Jiakang Yuan, Hongbin Zhou, Renqiu Xia, Renrui Zhang, Lei Bai, Song Mao, Bin Wang, Aojun Zhou, Botian Shi, Tao Chen, Bo Zhang^(corr.), Xiangyu Yue [Project][Models][Paper]

- We propose Chimera, a scalable pipeline that integrates specialist models into generalist LMMs, facilitating their adaptation to many specialized tasks.

- Chimera achieves SOTA performance on MathVista and MathVerse. Furthermore, it achieves near-specialist-level results in visual structural extraction on benchmarks such as ChartQA-SE, Table-SE, and Doc-SE.

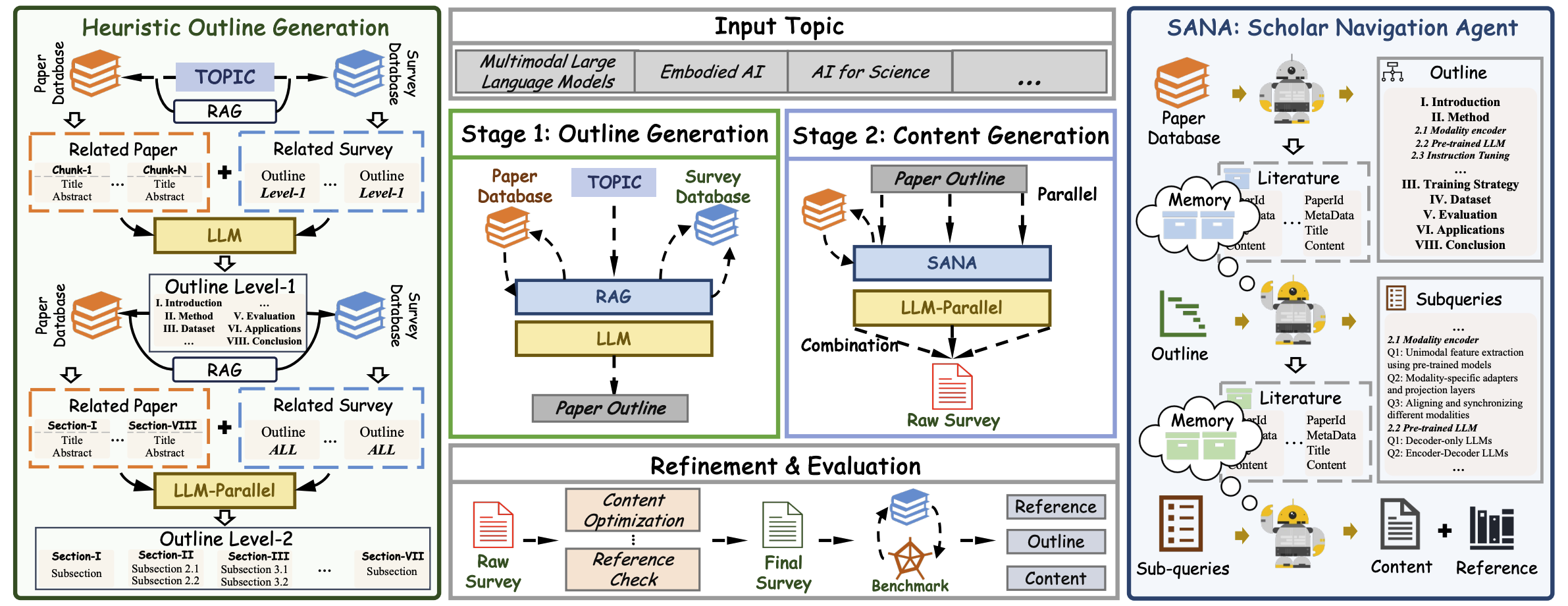

Xiangchao Yan, Shiyang Feng, Jiakang Yuan, Renqiu Xia, Bin Wang, Lei Bai, Bo Zhang^(corr.) [Project][Benchmark][Paper]

- We propose SurveyForge, a novel automated framework for generating high-quality academic survey papers.

- We propose a heuristic outline generation method and a memory-driven scholar navigation agent.

- To facilitate objective evaluation, we establish SurveyBench to assess outline, reference, and content quality.

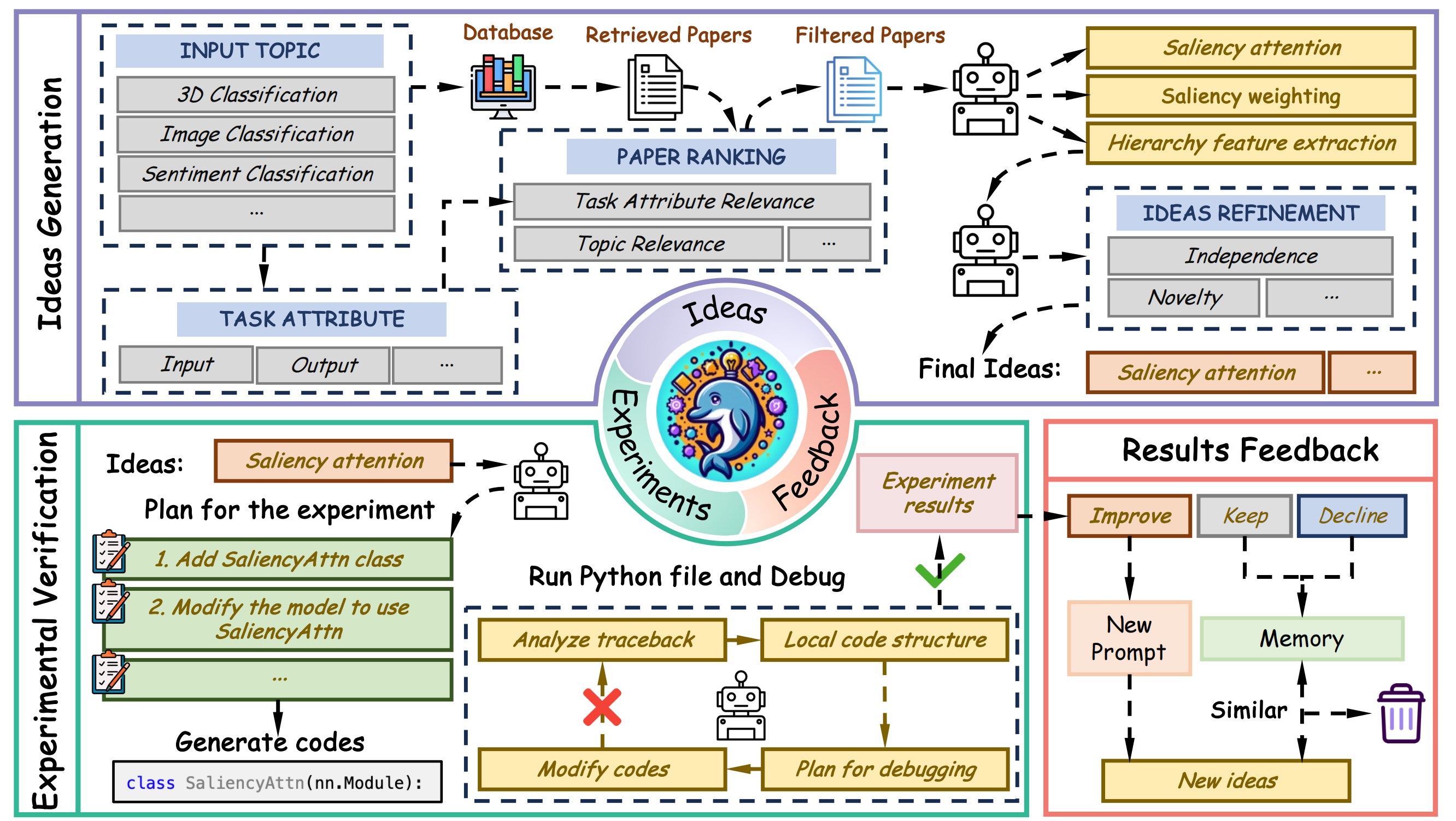

Dolphin: Moving Towards Closed-loop Auto-research through Thinking, Practice, and Feedback

Jiakang Yuan, Xiangchao Yan, Shiyang Feng, Bo Zhang^(corr.), Tao Chen, Botian Shi, Wanli Ouyang, Yu Qiao, Lei Bai, Bowen Zhou [Project][Paper]

- We propose task-attribute-guided paper ranking and an exception-traceback-guided debugging process to improve the quality of generated ideas and the success rate of code execution.

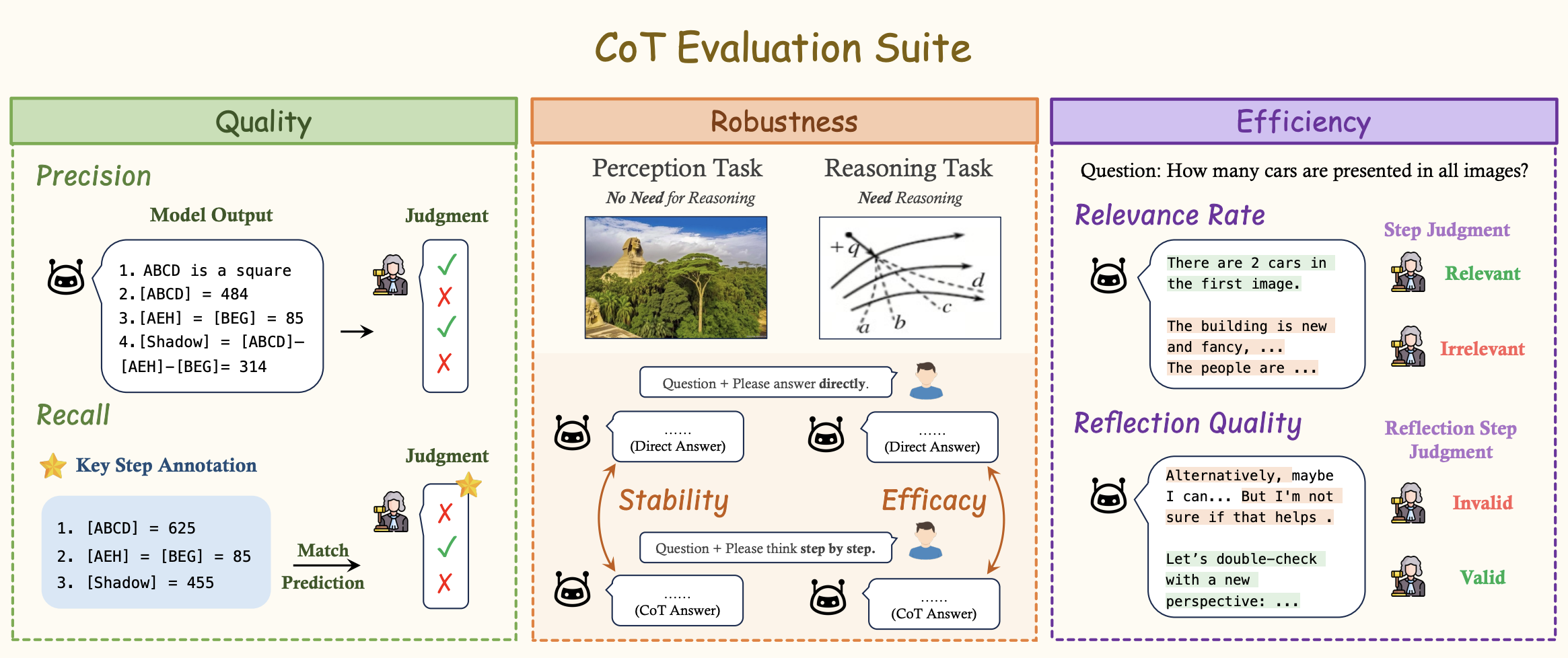

Dongzhi Jiang, Renrui Zhang, Ziyu Guo, Yanwei Li, Yu Qi, Xinyan Chen, Liuhui Wang, Jianhan Jin, Claire Guo, Shen Yan, Bo Zhang, Chaoyou Fu, Peng Gao, Hongsheng Li [Project][Paper]

- We introduce MME-CoT, a specialized benchmark for evaluating the CoT reasoning performance of LMMs.

- MME-CoT covers six domains: math, science, OCR, logic, space-time, and general scenes.

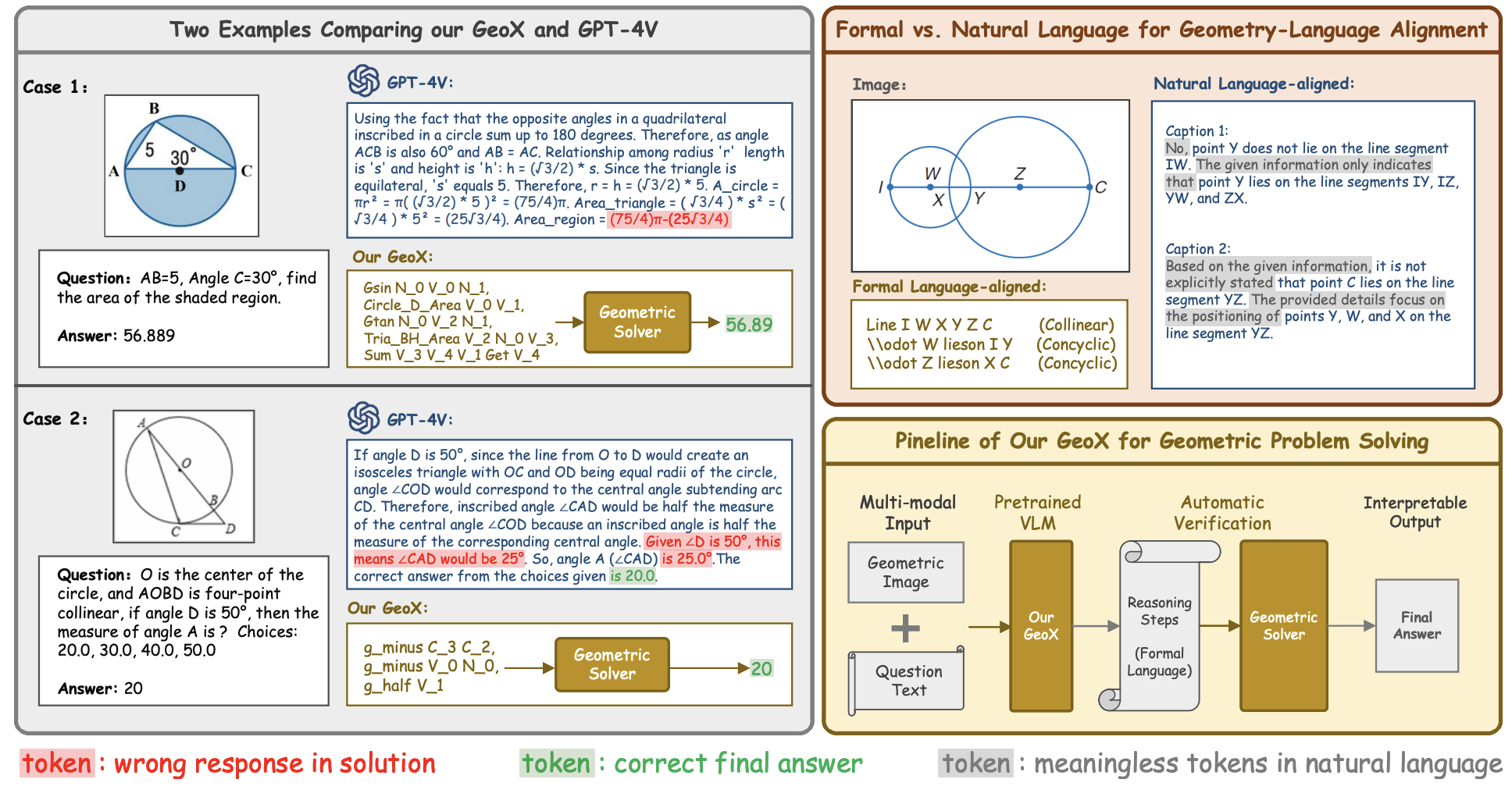

GeoX: Geometric Problem Solving Through Unified Formalized Vision-Language Pre-training

Renqiu Xia, Mingsheng Li, Hancheng Ye, Wenjie Wu, Hongbin Zhou, Jiakang Yuan, Tianshuo Peng, Xinyu Cai, Xiangchao Yan, Bin Wang, Conghui He, Botian Shi, Tao Chen, Junchi Yan, Bo Zhang^(corr.)

- Our study reveals the large potential of formalized vision-language pre-training for enhancing geometric problem-solving abilities. To enable formalized pre-training, we propose GeoX, which aims to build geometric generalist models by casting geometric tasks into a unified formulation.

- We propose a Generator-And-Sampler Transformer (GS-Former) to generate discriminative queries and eliminate uninformative representations from unevenly distributed geometric signals.

OmniCorpus: A Unified Multimodal Corpus of 10 Billion-Level Images Interleaved with Text

Qingyun Li, Zhe Chen, Weiyun Wang, Wenhai Wang, Shenglong Ye, Zhenjiang Jin, Guanzhou Chen, Yinan He, Zhangwei Gao, Erfei Cui, Jiashuo Yu, Hao Tian, Jiasheng Zhou, Chao Xu, Bin Wang, Xingjian Wei, Wei Li, Wenjian Zhang, Bo Zhang, Pinlong Cai, Licheng Wen, Xiangchao Yan, Zhenxiang Li, Pei Chu, Yi Wang, Min Dou, Changyao Tian, Xizhou Zhu, Lewei Lu, Yushi Chen, Junjun He, Zhongying Tu, Tong Lu, Yali Wang, Limin Wang, Dahua Lin, Yu Qiao, Botian Shi, Conghui He, Jifeng Dai

- We filter and extract large-scale, high-quality documents, which contain 8.6 billion images and 1,696 billion text tokens.

- Our dataset is 15 times larger than comparable corpora while maintaining high quality, features diverse sources (including English, non-English, and video-centric websites), and offers the flexibility to adapt from an image-text interleaved format to pure text or image-text pairs.

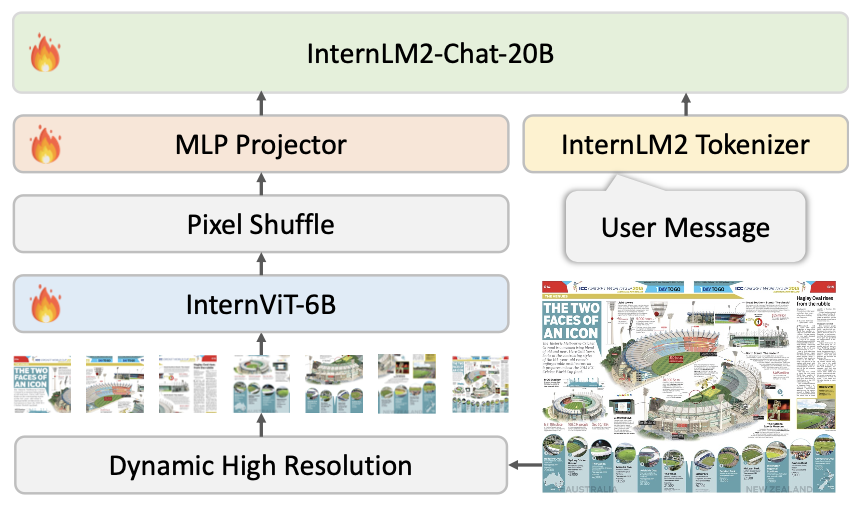

How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites

Zhe Chen, Weiyun Wang, Hao Tian, Shenglong Ye, Zhangwei Gao, Erfei Cui, Wenwen Tong, Kongzhi Hu, Jiapeng Luo, Zheng Ma, Ji Ma, Jiaqi Wang, Xiaoyi Dong, Hang Yan, Hewei Guo, Conghui He, Botian Shi, Zhenjiang Jin, Chao Xu, Bin Wang, Xingjian Wei, Wei Li, Wenjian Zhang, Bo Zhang, Pinlong Cai, Licheng Wen, Xiangchao Yan, Min Dou, Lewei Lu, Xizhou Zhu, Tong Lu, Dahua Lin, Yu Qiao, Jifeng Dai, Wenhai Wang

- Propose InternVL 1.5 and InternVL 2, which rank 1st among open-source VLMs on MMMU, DocVQA, ChartQA, and MathVista.

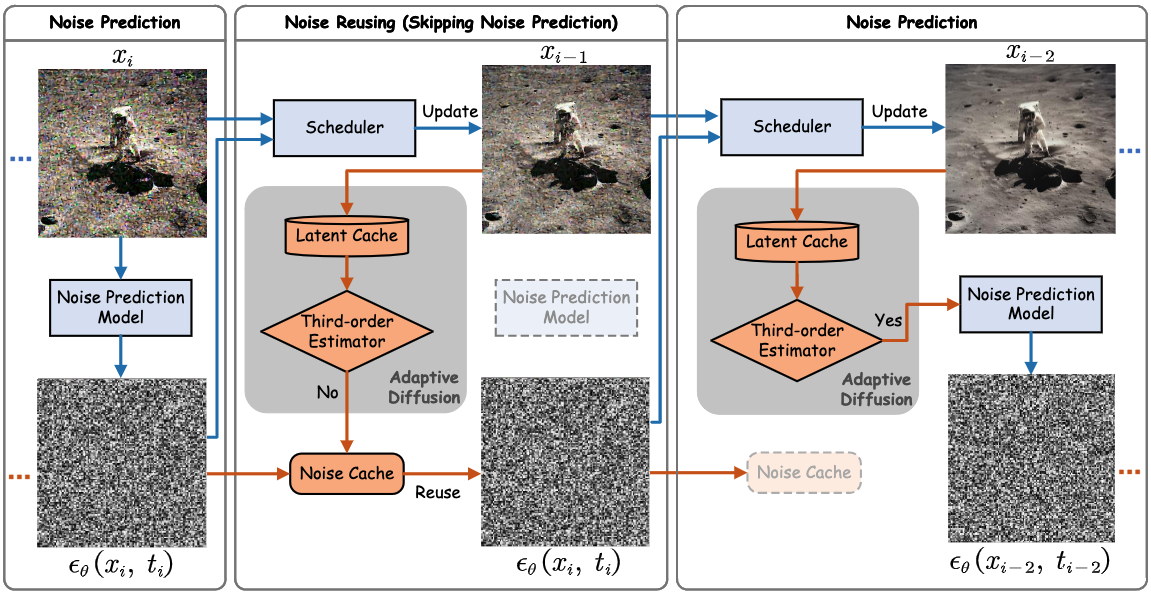

Training-Free Adaptive Diffusion with Bounded Difference Approximation Strategy

Hancheng Ye, Jiakang Yuan, Renqiu Xia, Xiangchao Yan, Tao Chen, Junchi Yan, Botian Shi, Bo Zhang^(corr.)

- Propose AdaptiveDiffusion, which adaptively reduces the number of noise-prediction steps during denoising, guided by the third-order latent difference.
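A third-order latent difference can be read as a backward finite difference over the four most recent latents. The snippet below is a minimal sketch under stated assumptions, not the AdaptiveDiffusion implementation: the skip rule, the relative-norm test, and the `threshold` value are hypothetical, and `third_order_difference` / `should_skip_noise_prediction` are illustrative names.

```python
import numpy as np


def third_order_difference(latents):
    """Backward third-order finite difference of the four most recent latents.

    latents: sequence of arrays ordered oldest -> newest (at least 4 entries).
    Returns x_t - 3 x_{t-1} + 3 x_{t-2} - x_{t-3}.
    """
    x_tm3, x_tm2, x_tm1, x_t = latents[-4:]
    return x_t - 3 * x_tm1 + 3 * x_tm2 - x_tm3


def should_skip_noise_prediction(latents, threshold=1e-3):
    """Hypothetical rule: reuse the cached noise prediction (skip the model call)
    when the third-order difference is small relative to the newest latent."""
    if len(latents) < 4:
        return False  # not enough history yet; always run the noise predictor
    diff = third_order_difference(latents)
    scale = np.linalg.norm(latents[-1]) + 1e-12  # avoid division by zero
    return bool(np.linalg.norm(diff) / scale < threshold)
```

A latent trajectory that is locally quadratic has a zero third-order difference, so under this assumed rule the next step would be skipped; a cubic trajectory would not be.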

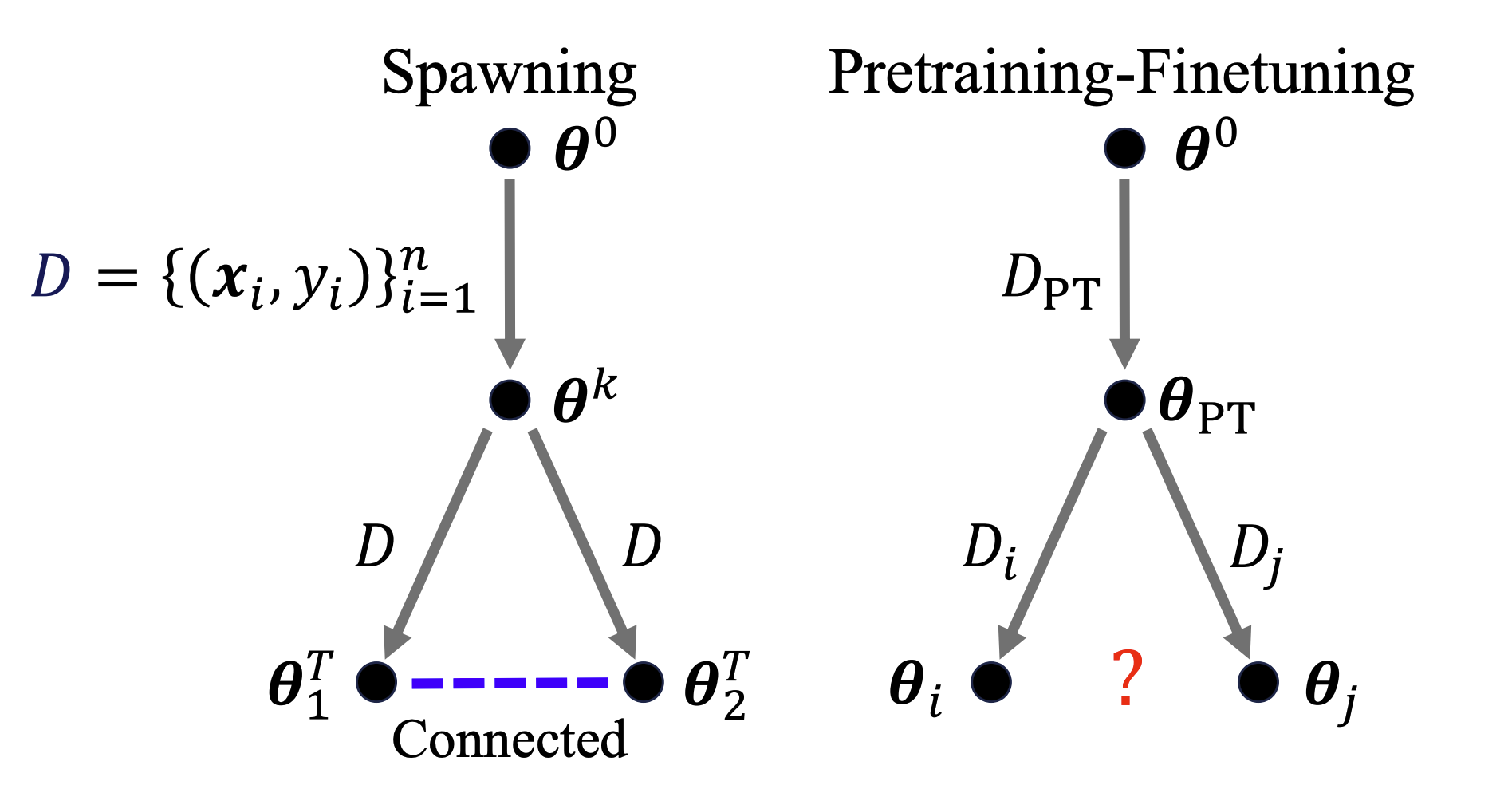

On the Emergence of Cross-Task Linearity in the Pretraining-Finetuning Paradigm

Zhanpeng Zhou, Zijun Chen, Yilan Chen, Bo Zhang^(corr.), Junchi Yan

- We discover an intriguing linear phenomenon in models that are initialized from a common pretrained checkpoint and finetuned on different tasks, termed Cross-Task Linearity (CTL).
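Informally, CTL says that interpolating the weights of two such finetuned models approximately interpolates their features at every layer. The statement can be sketched as follows, with notation assumed here rather than taken from this page: f^(l)(x; θ) is the feature at layer l for input x, θ_i and θ_j are two models finetuned on different tasks from the same pretrained checkpoint, and α is the interpolation weight.

```latex
f^{(\ell)}\big(x;\ \alpha\,\theta_i + (1-\alpha)\,\theta_j\big)
\;\approx\;
\alpha\, f^{(\ell)}(x;\ \theta_i) + (1-\alpha)\, f^{(\ell)}(x;\ \theta_j),
\qquad \alpha \in [0, 1]
```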

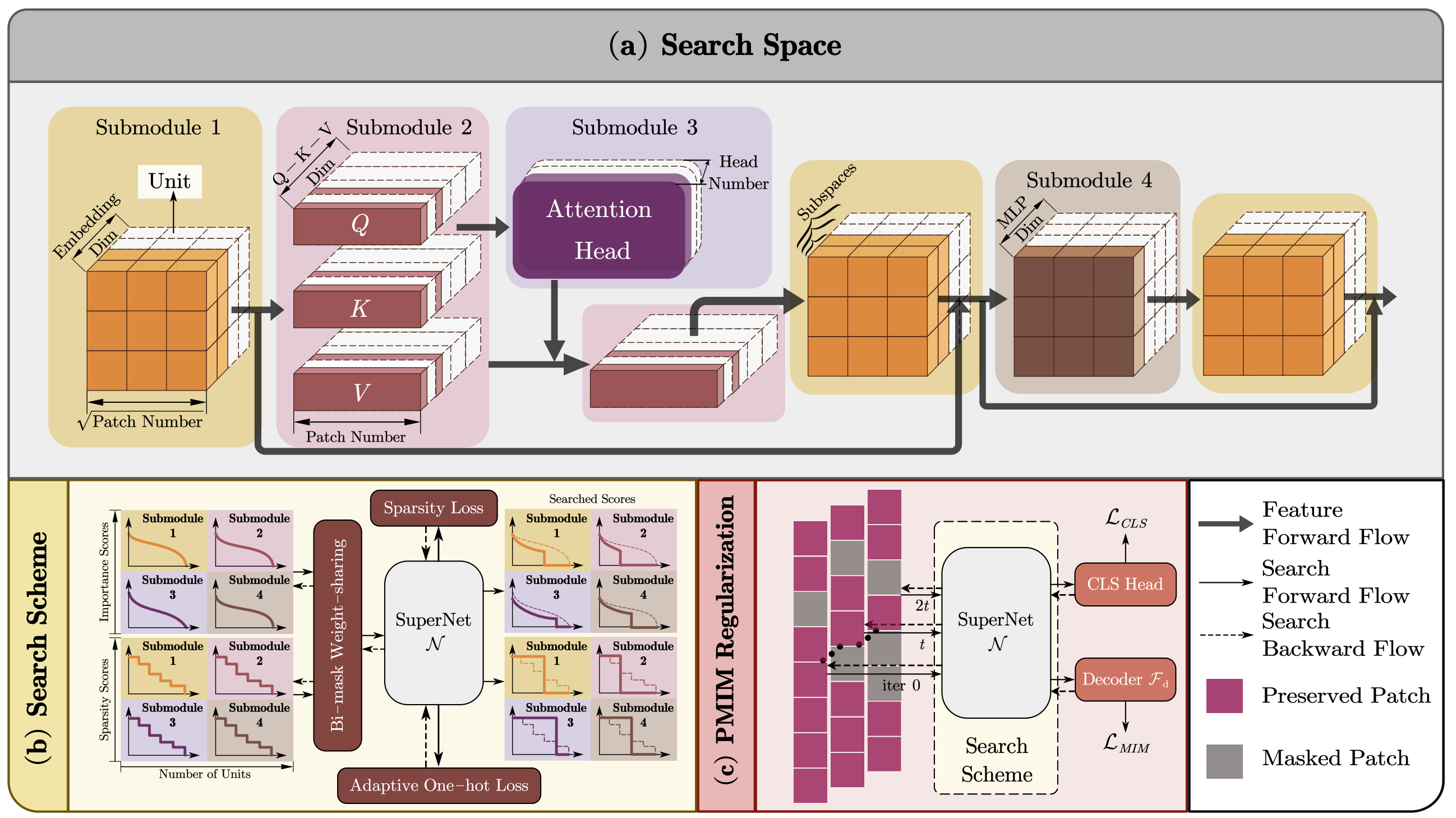

Once for Both: Single Stage of Importance and Sparsity Search for Vision Transformer Compression

Hancheng Ye, Chong Yu, Peng Ye, Renqiu Xia, Yansong Tang, Jiwen Lu, Tao Chen, Bo Zhang^(corr.)

- We investigate how to integrate the evaluations of importance and sparsity scores into a single stage, searching the optimal subnets in an efficient manner.

- We present Once for Both (OFB), a cost-efficient approach that simultaneously evaluates importance and sparsity scores for the Vision Transformer Compression (VTC) task.

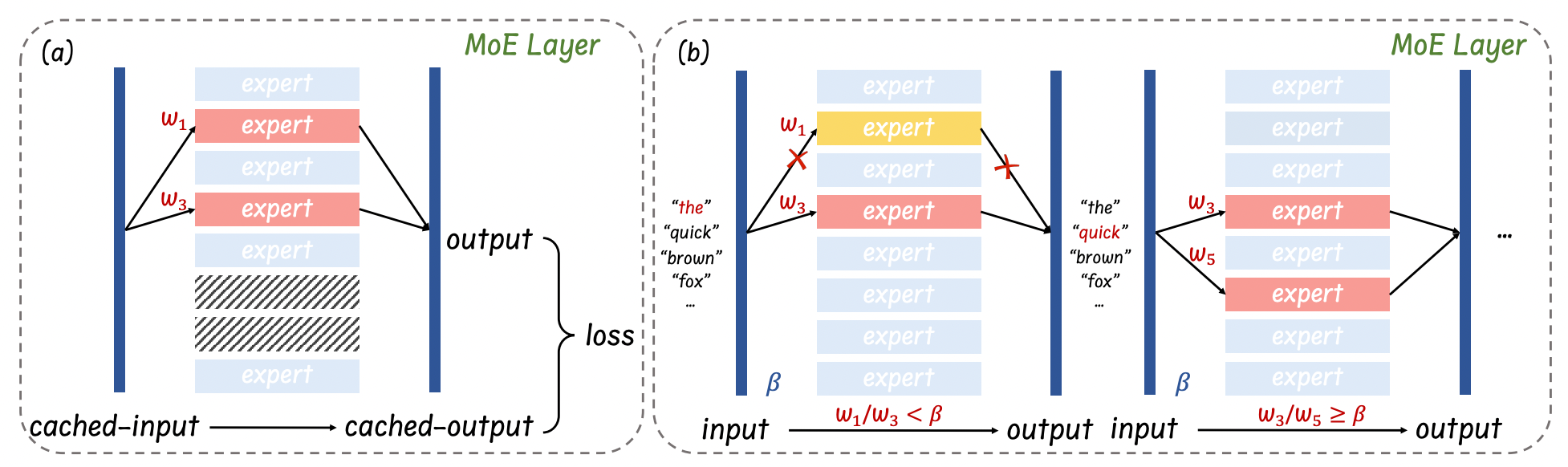

Xudong Lu, Qi Liu, Yuhui Xu, Aojun Zhou, Siyuan Huang, Bo Zhang, Junchi Yan, Hongsheng Li

- Unlike previous weight-pruning methods that rely on specially designed hardware, this paper mainly aims to enhance the deployment efficiency of MoE LLMs by introducing plug-and-play expert-level sparsification techniques.

- We present post-training approaches for task-agnostic and task-specific expert pruning and skipping in MoE LLMs.
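As an illustration of expert-level skipping, the sketch below assumes a top-2 MoE router and a hypothetical rule: for each token, the second expert is skipped when its routing weight falls below `beta` times the top-1 weight, and the kept weights are renormalized. The function name `route_top2_with_skipping` and the default `beta=0.5` are assumptions for illustration; the paper's exact pruning and skipping criteria are not reproduced here.

```python
import numpy as np


def route_top2_with_skipping(router_logits, beta=0.5):
    """Top-2 routing with a hypothetical dynamic skip of the second expert.

    router_logits: array of shape (num_tokens, num_experts), num_experts >= 2.
    Returns, per token, a list of (expert_index, weight) pairs whose weights
    are renormalized over the experts that are actually kept.
    """
    # Softmax over experts (subtract the row max for numerical stability).
    shifted = router_logits - router_logits.max(axis=-1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=-1, keepdims=True)

    routes = []
    for token_probs in probs:
        first, second = np.argsort(token_probs)[::-1][:2]  # two largest weights
        kept = [first]
        if token_probs[second] >= beta * token_probs[first]:
            kept.append(second)  # second expert is strong enough to keep
        total = sum(token_probs[e] for e in kept)
        routes.append([(int(e), float(token_probs[e] / total)) for e in kept])
    return routes
```

With `beta` near 0 the second expert is almost always kept; larger values skip it more often, trading some accuracy for fewer expert computations per token.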

Bo Zhang, Xinyu Cai, Jiakang Yuan, Donglin Yang, Jianfei Guo, Xiangchao Yan, Renqiu Xia, Botian Shi, Min Dou, Tao Chen, Si Liu, Junchi Yan, Yu Qiao

- Provide a new perspective on alleviating domain shifts by proposing a Reconstruction-Simulation-Perception scheme.

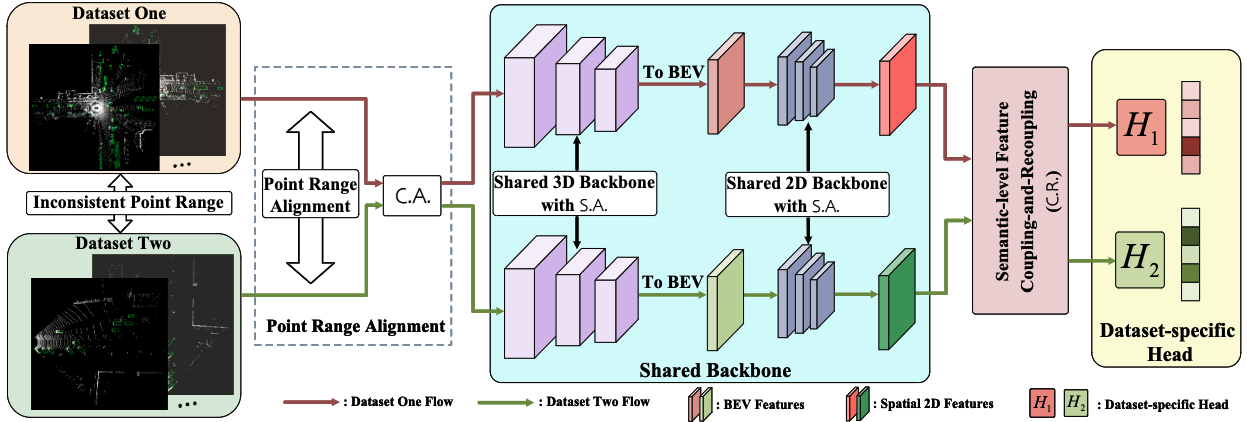

Uni3D: A Unified Baseline for Multi-dataset 3D Object Detection

Bo Zhang, Jiakang Yuan, Botian Shi, Tao Chen, Yikang Li, Yu Qiao

- Present Uni3D, which tackles multi-dataset 3D object detection at both the data level and the semantic level.

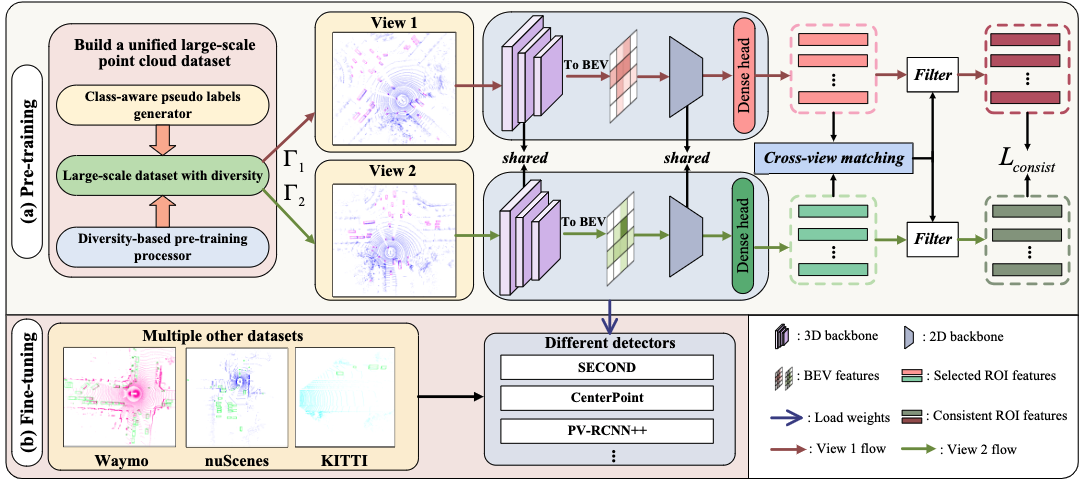

AD-PT: Autonomous Driving Pre-Training with Large-scale Point Cloud Dataset

Jiakang Yuan, Bo Zhang^(corr.), Xiangchao Yan, Tao Chen, Botian Shi, Yikang Li, Yu Qiao

- Build a large-scale point-cloud pre-training dataset with a diverse data distribution, and learn generalizable representations from it.

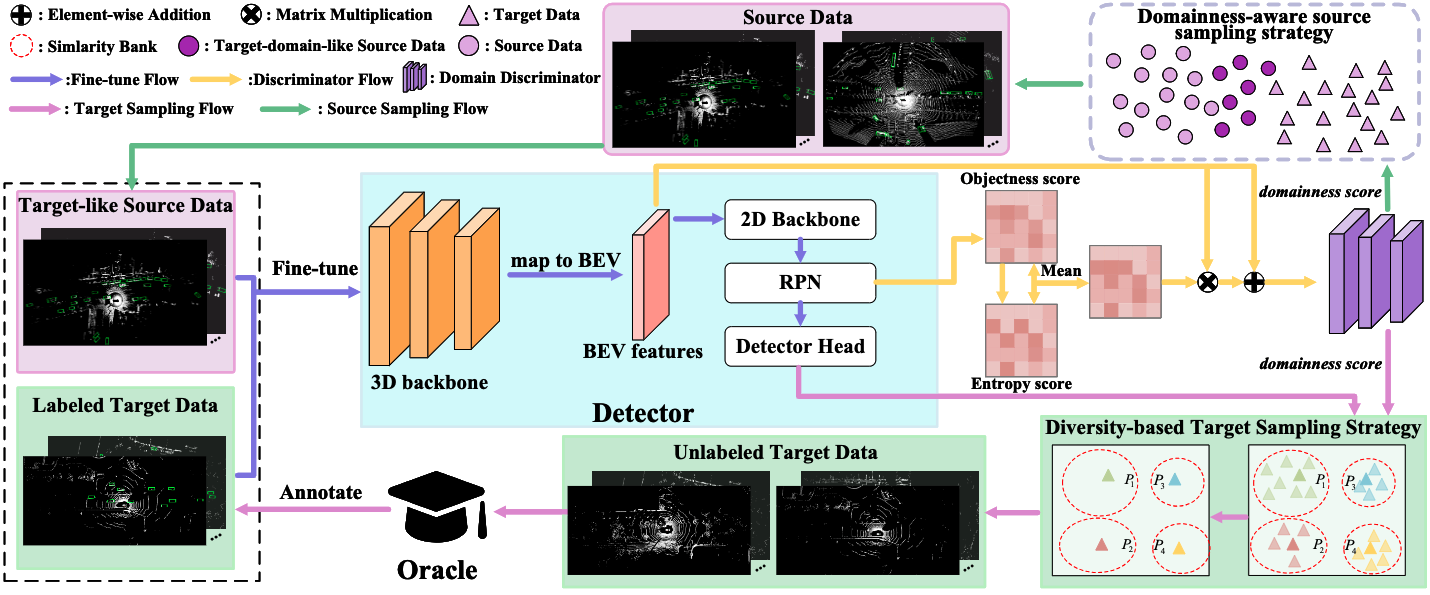

Bi3D: Bi-domain Active Learning for Cross-domain 3D Object Detection

Jiakang Yuan, Bo Zhang^(corr.), Xiangchao Yan, Tao Chen, Botian Shi, Yikang Li, Yu Qiao

- Propose a bi-domain active learning approach that selects samples from both the source and target domains to solve the cross-domain 3D object detection task.

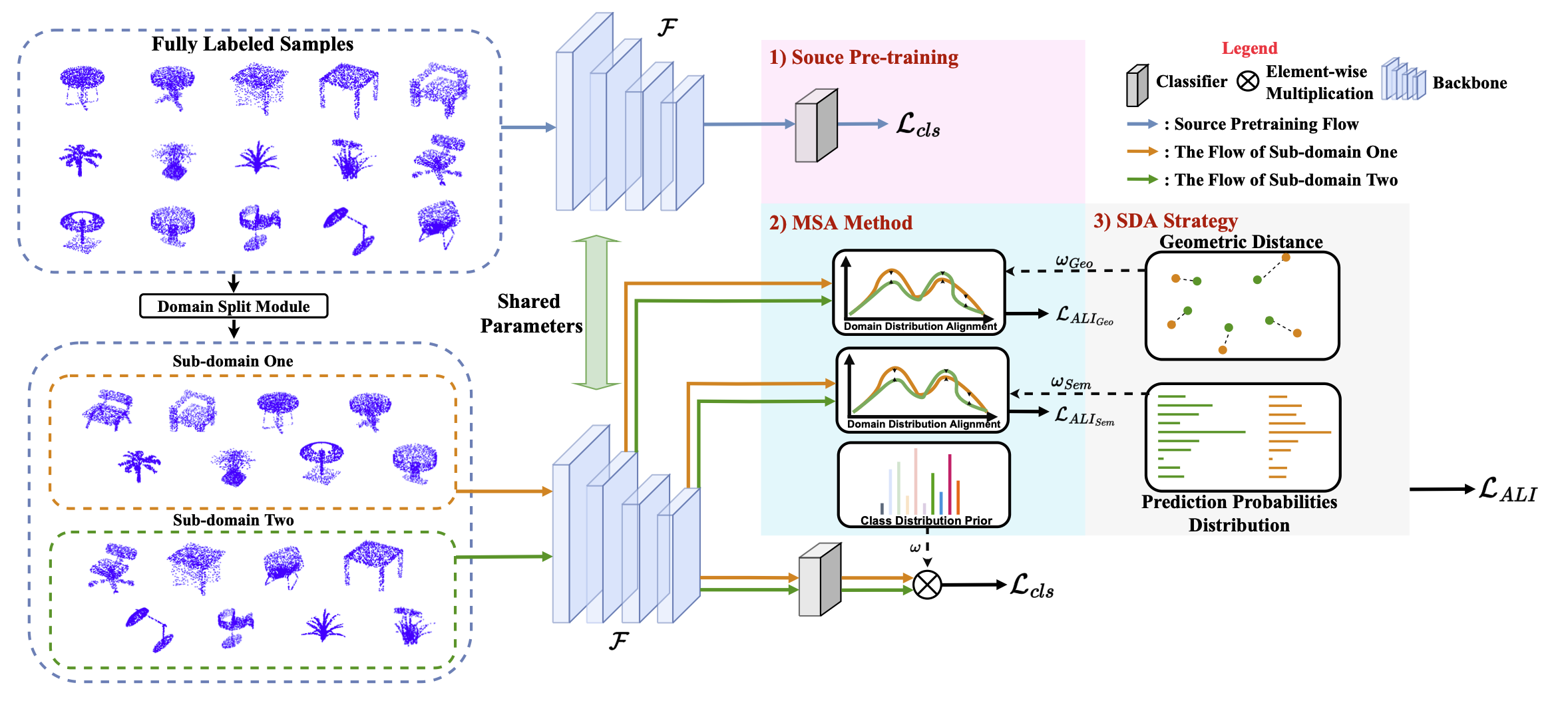

SUG: Single-dataset Unified Generalization for 3D Point Cloud Classification

Siyuan Huang, Bo Zhang^(corr.), Botian Shi, Peng Gao, Yikang Li, Hongsheng Li

- Propose a Single-dataset Unified Generalization (SUG) framework that leverages only a single source dataset to alleviate the unforeseen domain differences faced by a well-trained source model.

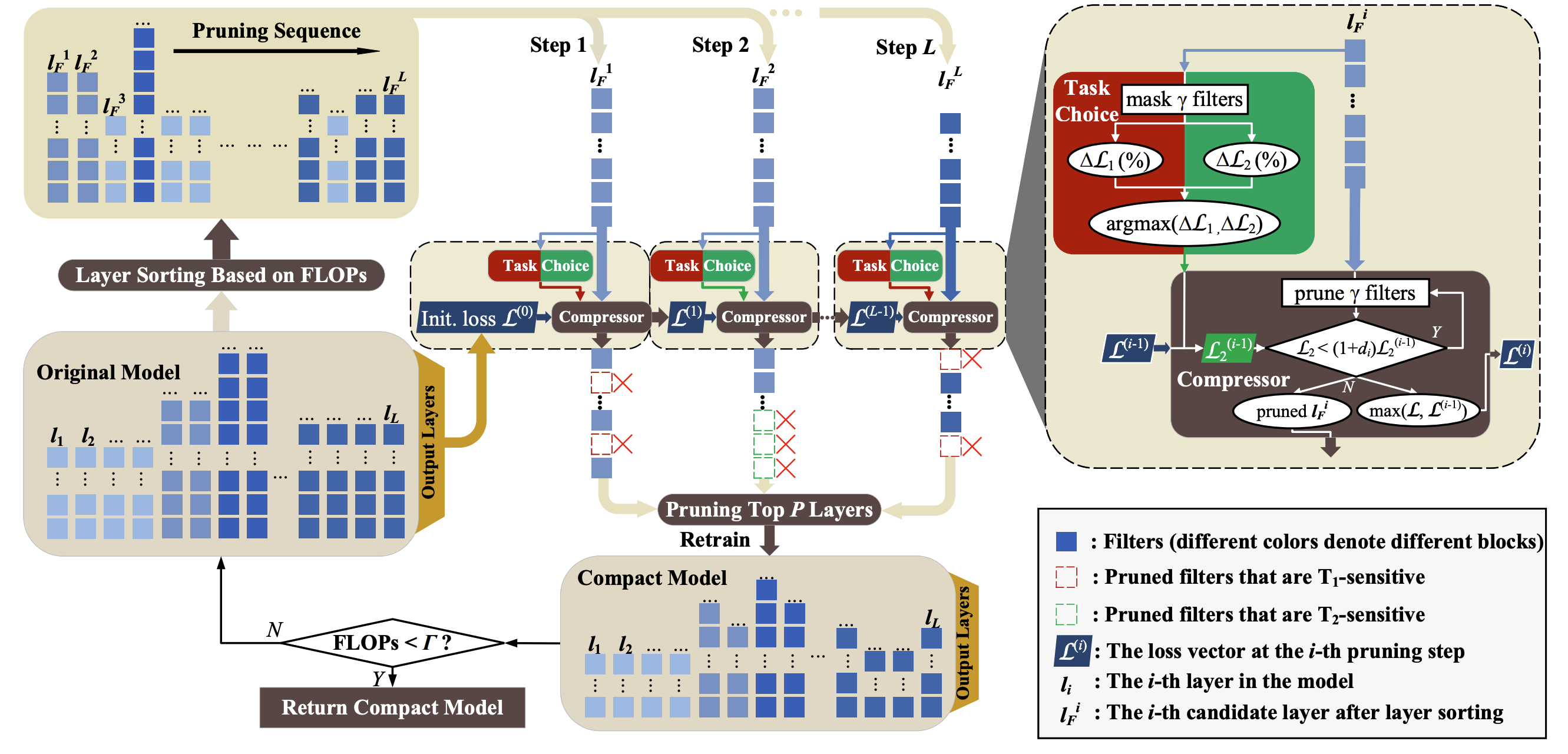

Performance-aware Approximation of Global Channel Pruning for Multitask CNNs

Hancheng Ye, Bo Zhang, Tao Chen, Jiayuan Fan, Bin Wang

- We propose a Performance-Aware Global Channel Pruning (PAGCP) framework. We first theoretically present the objective for achieving superior GCP, by considering the joint saliency of filters from intra- and inter-layers.

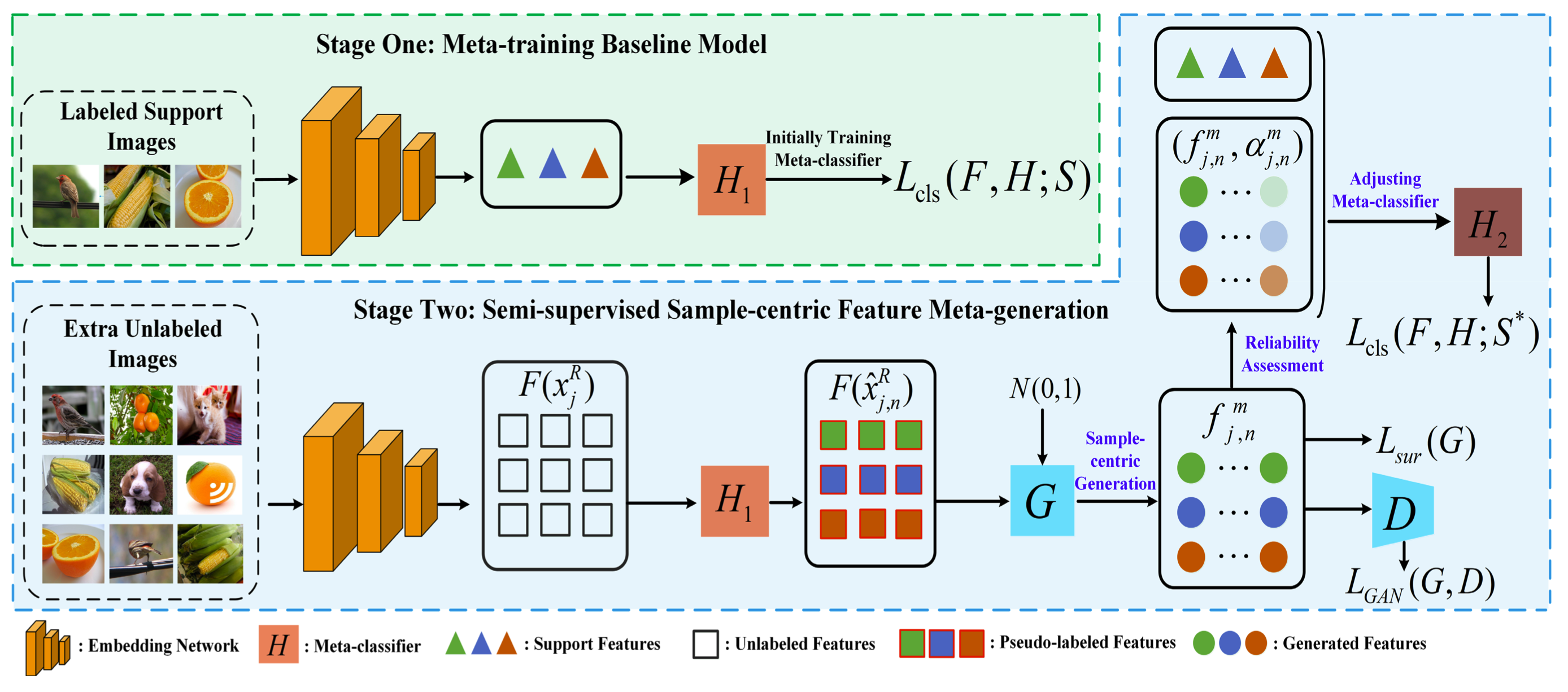

Sample-Centric Feature Generation for Semi-Supervised Few-Shot Learning

Bo Zhang, Hancheng Ye, Gang Yu, Bin Wang, Yike Wu, Jiayuan Fan, Tao Chen

- Propose a sample-centric feature generation (SFG) approach for semi-supervised few-shot image classification.

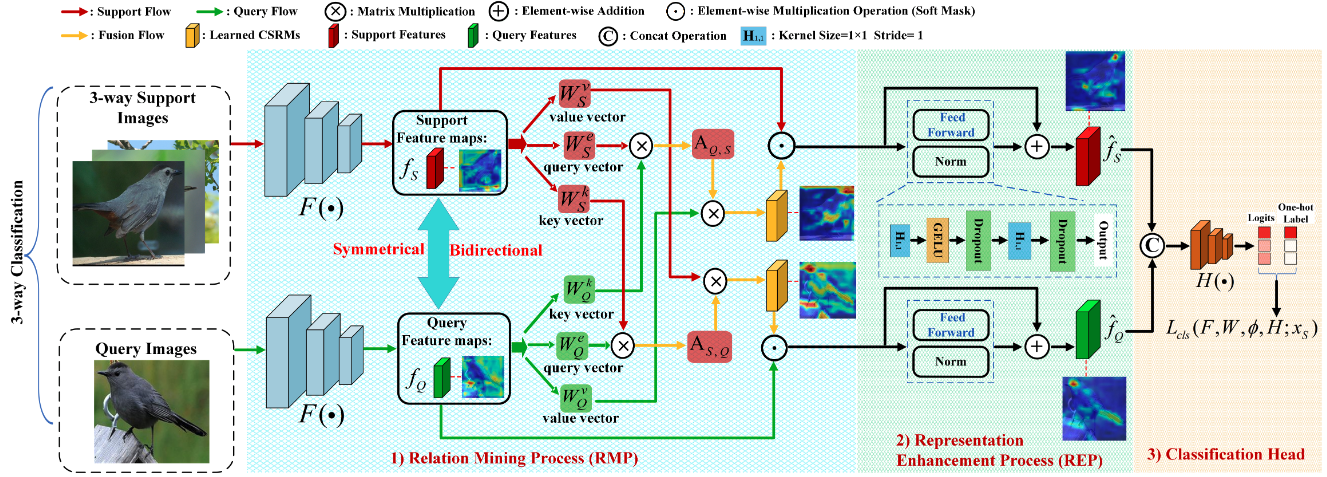

Bo Zhang, Jiakang Yuan, Baopu Li, Tao Chen, Jiayuan Fan, Botian Shi

- Propose a Transformer-based double-helix model that mines cross-image object semantic relations in a bidirectional and symmetric manner.

💬 Invited Talks

- 2024.07, Invited talk at the Multimodal Large Model Summit. [Video]

- 2023.09, Invited talk on Efficient Pre-training of Autonomous Driving. [Video]

- 2023.07, Invited talk on Towards 3D General Representation at Techbeat. [Video]

- 2023.03, Invited talk on Transferable Perception of Autonomous Driving. [Video]

💻 Internships

- 2021.12 - 2022.06, Shanghai AI Laboratory, China.

🎓 Ph.D. Thesis

During his Ph.D. studies, Bo Zhang dedicated himself to advancing domain-adaptive models for both 2D and 3D domains. With a strong foundation in both theoretical research and practical applications, he has gained extensive expertise in model adaptation and continuous learning.